Can AI understand culture, biology and irony? Four research projects receive millions in funding

Four new research projects based at the Department of Computer Science have received grants totalling 24 million Danish kroner from the Independent Research Fund Denmark. The projects range from basic research into the inner mechanisms and cultural understanding of large language models to the development of reliable and usable AI models in biology.

Can language models learn to understand culture and irony? How do language models actually arrive at the answers they give? Is it possible to develop more reliable AI models in biology? And can we get a computer to show how genes behave in biological processes?

These are the questions behind four new research projects at the Department of Computer Science at the University of Copenhagen, all with artificial intelligence as their common denominator. In 2025, the projects received funding from the Independent Research Fund Denmark (DFF).

- As one of Denmark's strongest academic environments in artificial intelligence research, our researchers are exploring and pushing the boundaries of what artificial intelligence can do. It is a great recognition that DFF supports our ambition to develop AI that is not only advanced but also reliable. At the same time, the funding helps the University of Copenhagen fulfil its responsibility to influence the development of AI for the benefit of society, says Ken Friis Larsen, Head of Department.

Three of the projects received a thematic grant dedicated to research into artificial intelligence, a call in which only 6% of applications were successful. Since 2018, DFF has awarded research grants within politically determined themes, financed through annual political agreements on the distribution of the research reserve.

The fourth grant is one of DFF's classic grants for free, groundbreaking research. These grants give talented researchers the opportunity to pursue their own research ideas.

Read more about the four projects below.

CuRe: Cultural Reasoning for Responsible Language Model Development

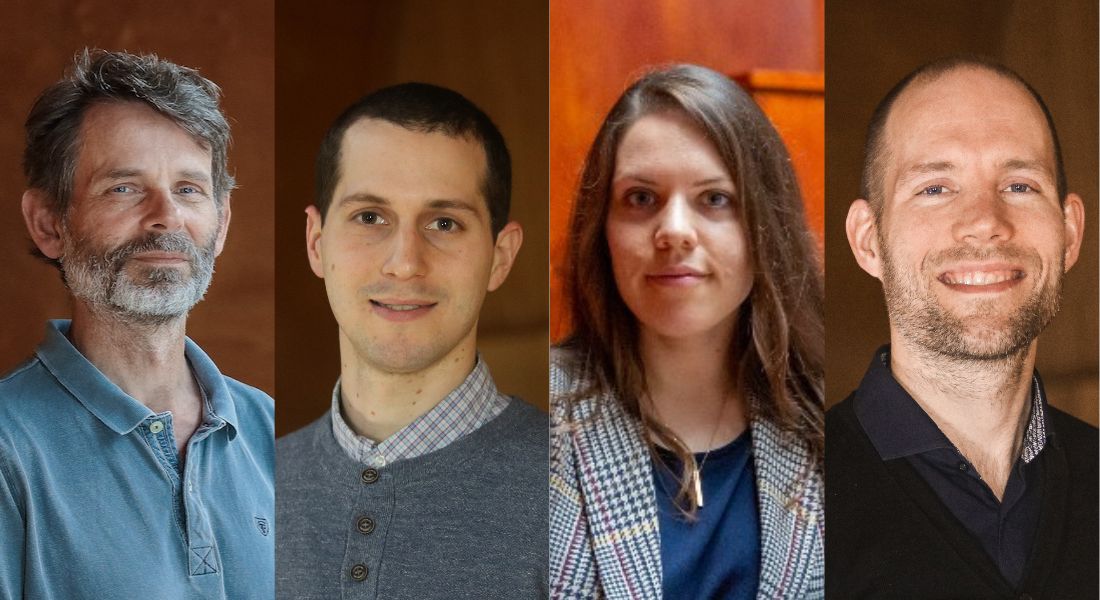

Principal investigator: Tenure-track Assistant Professor Daniel Hershcovich

Co-principal investigator: Associate Professor Jens Bjerring-Hansen (Department of Nordic Studies and Linguistics)

Grant amount: DKK 7,199,998

Abstract:

This project develops new methods for evaluating and improving cultural reasoning in language models using literary texts. While current AI systems can retrieve facts, they struggle with interpretive tasks such as irony, perspective and cultural references, especially in underrepresented languages like Danish. CuRe addresses this gap by creating soft, human-annotated benchmarks grounded in literary analysis, and by testing models such as retrieval-augmented generation (RAG) systems on these tasks. The project integrates insights from NLP, literary studies and humanistic evaluation theory to advance culturally responsible AI. It involves partners at UC Berkeley, McGill University and the University of Copenhagen, and contributes to international efforts towards fair and explainable language technologies.

Human-Centered Explainable Retrieval-Augmented LLMs

Principal investigator: Professor Isabelle Augenstein

Co-principal investigator: Professor Irina Shklovski

Grant amount: DKK 7,196,132

Abstract:

Despite their high performance, large language models (LLMs) still make many factual errors. Additional documents are commonly retrieved to extend an LLM's knowledge, but the LLM may ignore these documents when generating text. Little work has been done to make these inner workings transparent to users, to support interventions, or to build a deeper understanding of these issues. This project will develop explainable AI methods that strengthen fact-based learning and education and improve applicability to information retrieval, by enabling physicians to justify generated output and to intervene based on the evidence applied. It will generate new insights into strategic evidence gathering and knowledge management, and into what users consider the key factors when critically evaluating LLM output.

TRANSFORMBIO: Tractable Neuro-Symbolic Foundations for Reliable Models in Biology

Principal investigator: Professor Wouter Boomsma

Co-principal investigator: Dr. Antonio Vergari (University of Edinburgh)

Grant amount: DKK 6,572,380

Abstract:

Large AI models are transforming biology. For proteins, for instance, language models and structure-to-sequence models have fundamentally changed how evolutionary patterns are analysed and how new variants are designed. However, critical prediction tasks in biology remain out of reach for AI because we lack principled ways to enforce constraints, such as codon structure in gene annotation or structural constraints from knowledge graphs. Neuro-symbolic (NeSy) machine learning offers a promising solution by combining the expressiveness of neural networks with the reasoning of symbolic AI. Yet symbolic methods often lack scalability and are hard to integrate with large neural models. We propose a NeSy framework that enables tractable inference, including exact conditioning, to produce flexible, biologically grounded predictions. By extending tractable NeSy models such as circuits and integrating them with biomolecular foundation models, we aim to create robust, interpretable and biologically faithful systems.

Demystifying Gene Regulatory Networks with Deep Generative Models

Principal investigator: Professor Anders Krogh

Participant: Research Assistant Adrián Sousa-Poza, who will be employed as a PhD student

Grant amount: DKK 3,024,592

Abstract:

This project aims to develop deep generative models that capture gene regulatory networks (GRNs) and predict cellular responses to perturbations of gene expression. By training on single-cell data, these models will learn regulatory mechanisms and be able to predict the effects of unseen cell perturbations. The research builds on the Deep Generative Decoder (DGD) framework developed by the Krogh group, combined with newer deep learning methods such as transformers and diffusion models. The project addresses key challenges, including limited data, gaps in interpretability and the need for standardisation. Through collaborations, we will gain access to unique multi-omics and time-series datasets that are crucial for model validation. By integrating multi-omics data and leveraging GRN information, this work advances in silico perturbation modelling, potentially reducing reliance on costly experiments and contributing to more personalised treatment of complex diseases.

Contact

Caroline Wistoft

Communications Advisor

University of Copenhagen

cawi@adm.ku.dk